Zoom Virtual Agent - Bot testing framework

There was no way to test a bot end to end without publishing it to your site. This caused a lot of friction and mistrust in the product, and left it unclear what end consumers were actually being shown.

Overview

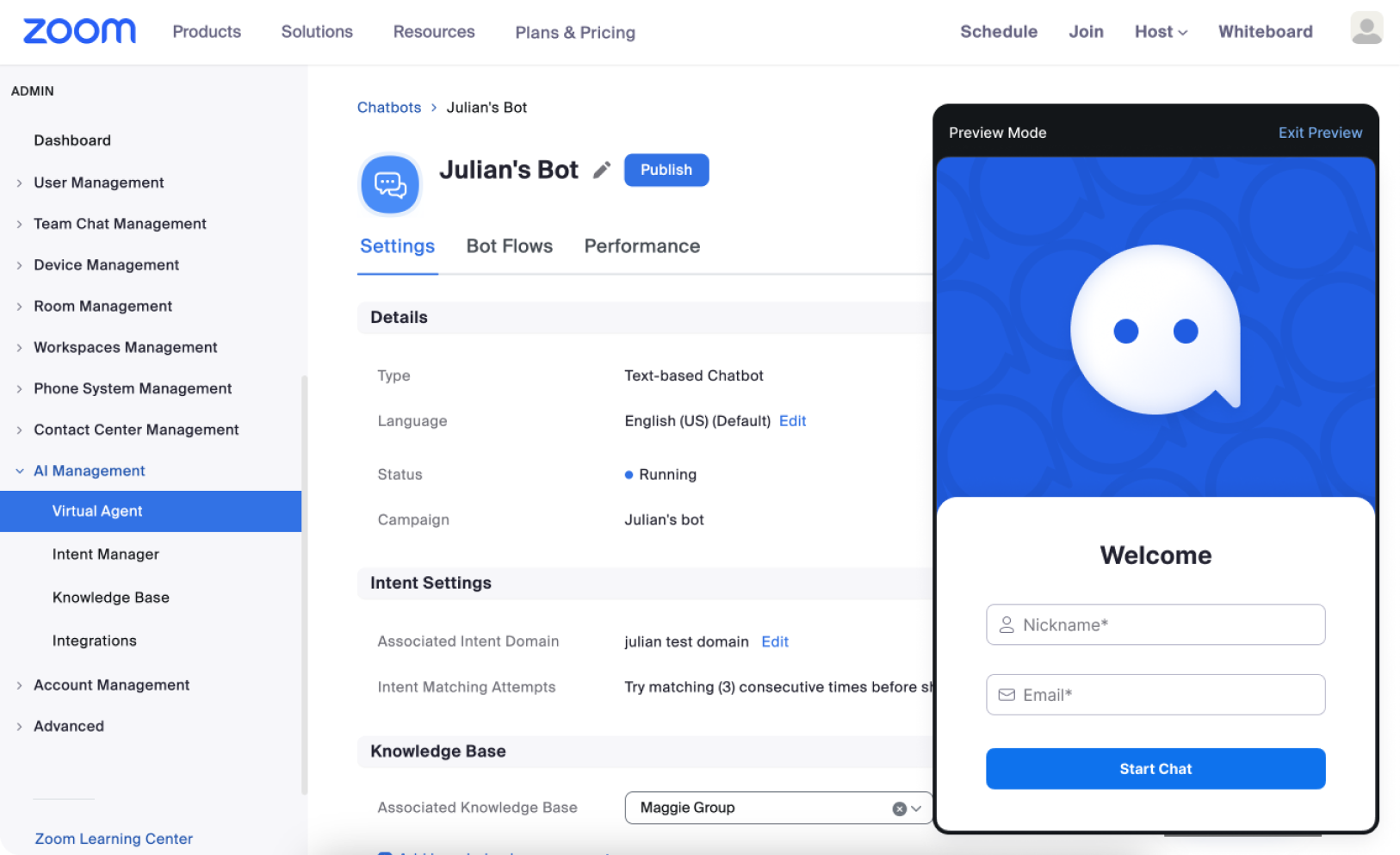

The Virtual Agent offering from Zoom is an AI chatbot-as-a-service that lets customers build custom bots to help their own customers get what they need, whether that is support, sales, internal help, or any other use case.

A crucial part of the bot experience is testing the bot before publishing. Testing confirms two things:

1. The bot looks and feels the way you want it to, so it reflects your brand.

2. The bot is surfacing the correct information, so you can trust the product with your customers.

The Problem

A test bot existed that could generally test the AI models within the chatbot, but it gave no indication of why a response showed (or didn't). Crucial functionality, such as connecting to support, was missing, and there was no easy way to test the end-to-end experience before publishing the bot.

Customers and internal contacts had to create very time-consuming workarounds to test end to end, and there was no way to understand why the bot was behaving the way it was. It was a complete black box.

I needed to learn more about the problem and opportunity space to completely understand the topic.

Discovery Research

To pin down the specific issues and help determine the scope of the project, we dug deeper into the problem and opportunity space.

We ran 10 interview sessions with a wide range of personas, including technical bot builders, professional services, customers, pre-sales, and more.

We also looked at 10 different competitors to understand their current testing functionality. The goal was not to decide what we should build, but to understand industry standards and optimal patterns.

Discovery Findings

The discovery research surfaced some common themes.

There was no detailed insight into why the bot answered the way it did, which led to frustration and hopelessness with the current product.

Customers lacked trust before publishing because they could not apply brand customizations or preview the bot, which led to massive workarounds just to see a preview.

Support functionality could not be tested without publishing the bot, which also greatly diminished trust.

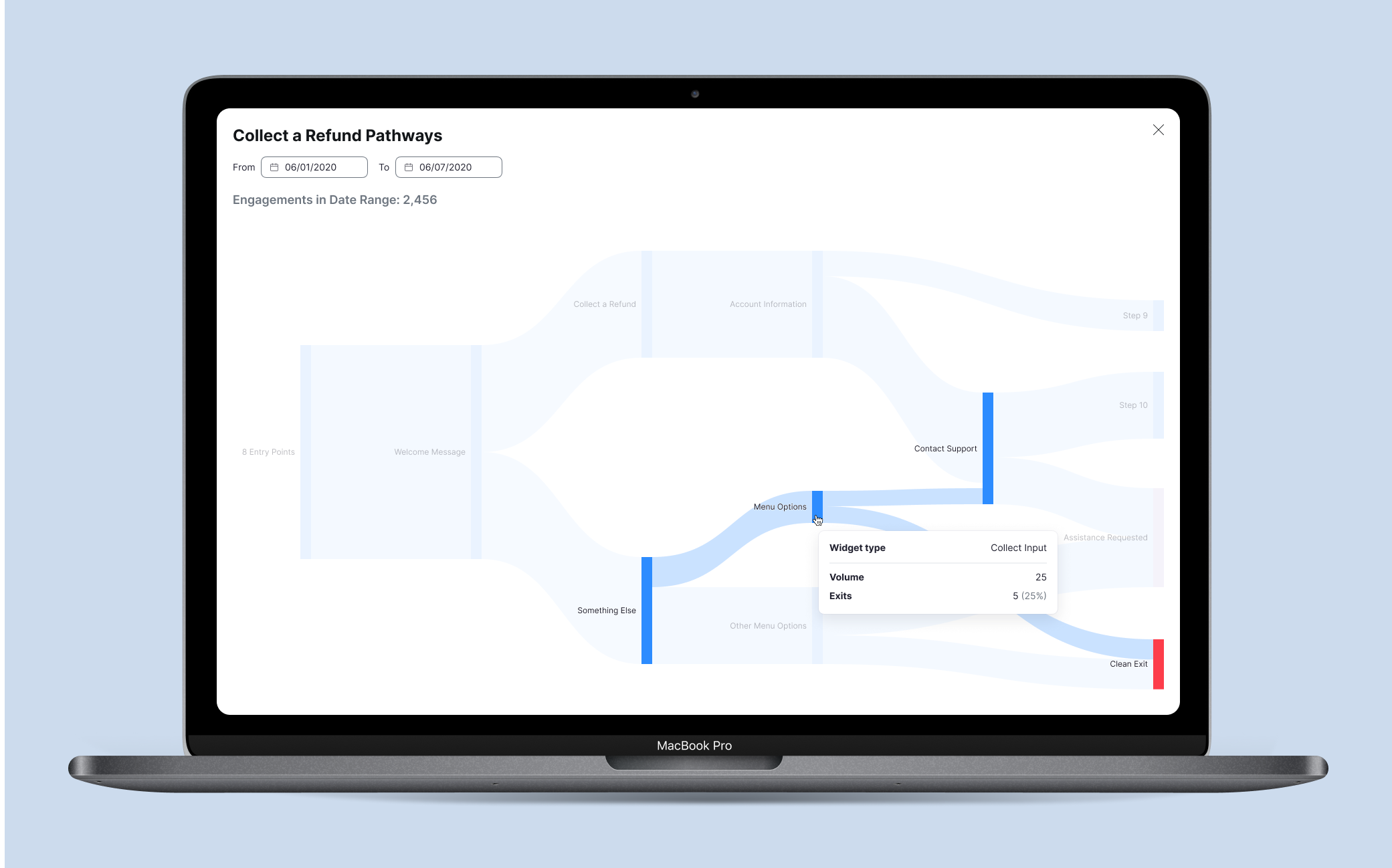

There was no way of knowing which bot flows were used, or how users behaved within the bot.

After I presented the findings to my wider team, we were able to focus on four areas for improvement:

End-to-end testing

Detailed bot testing view

Flexible visual testing

Future-proof bot testing

Design Solution

After synthesizing and presenting my findings to the wider team, I was ready to start exploring design concepts. Here is what we came up with:

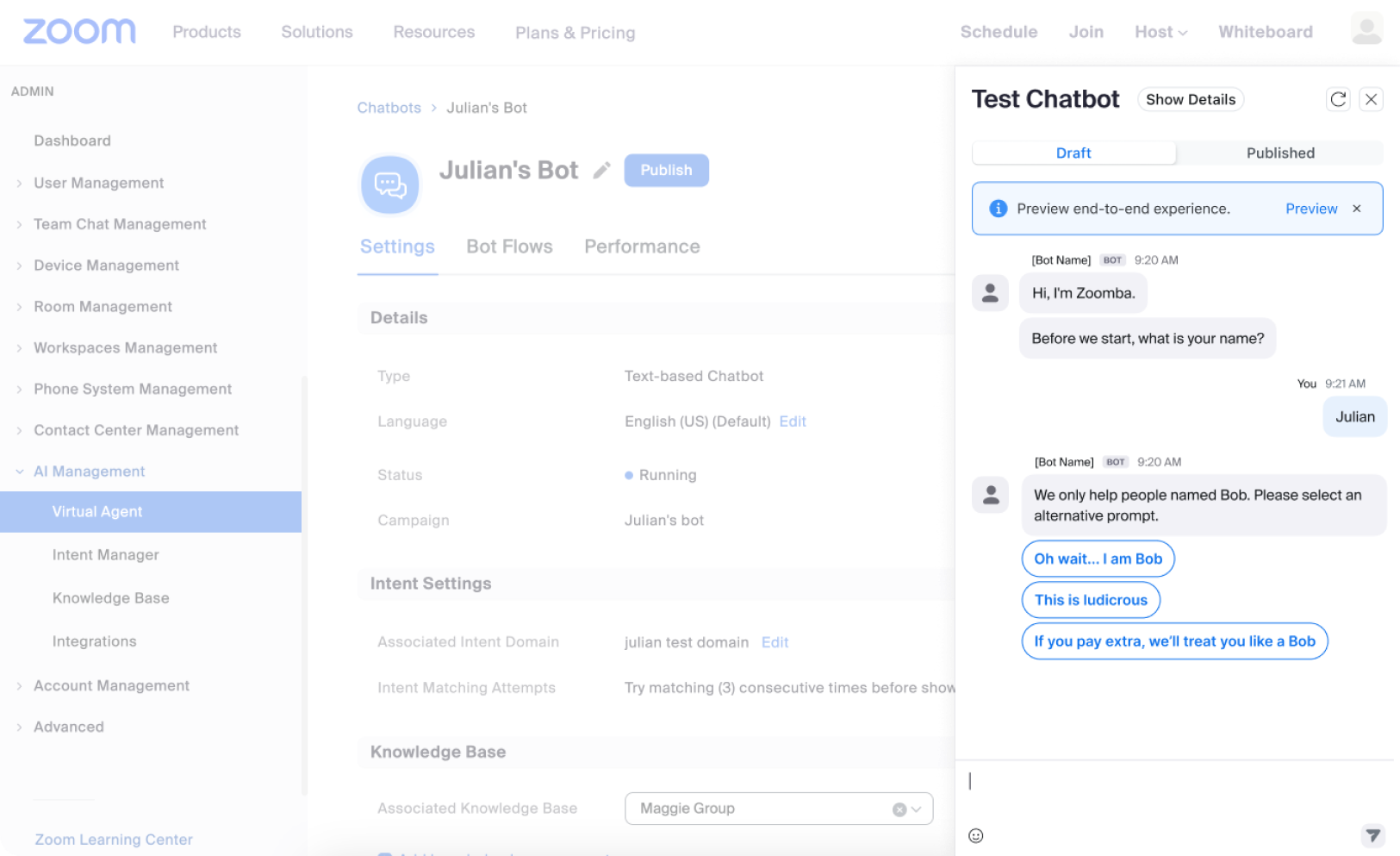

Create a framework to support new bot types that are coming out soon.

Allow flexible end-to-end testing, so testing can be done at any time in the bot development process, from the earliest stages through UAT.

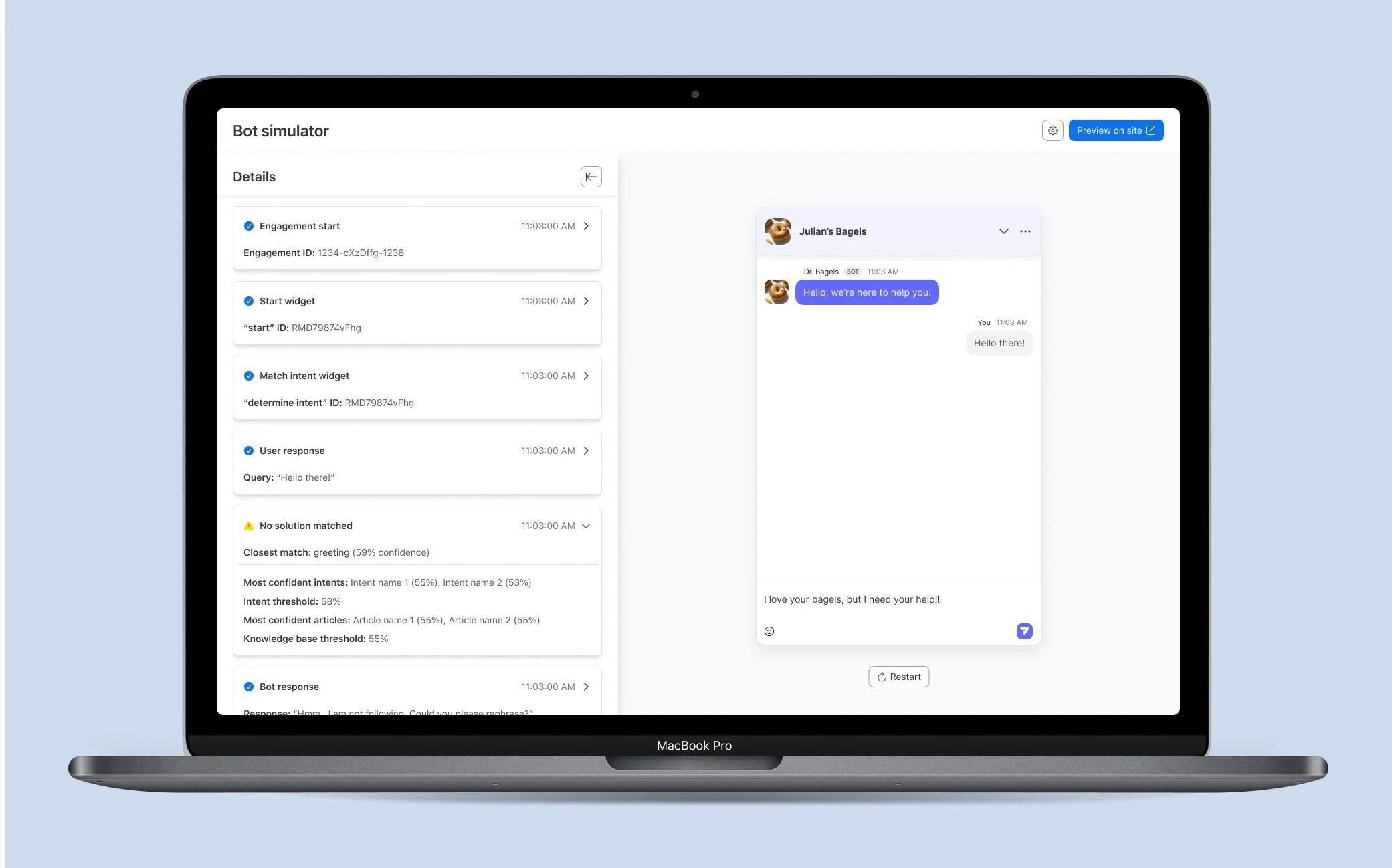

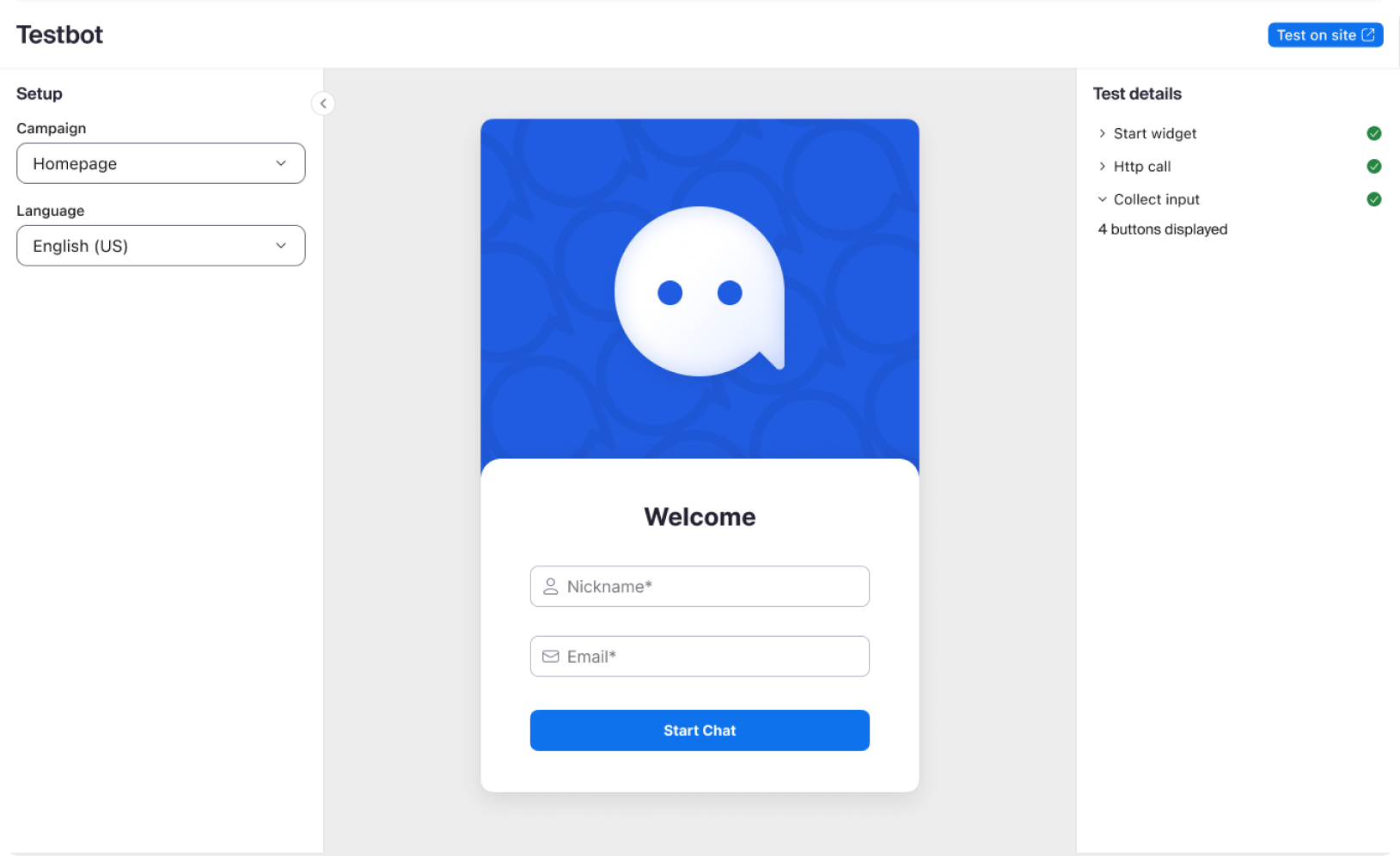

Create a detailed view of the engagement as you test, so you understand exactly why the bot is responding the way it is and what you can do to fix it.

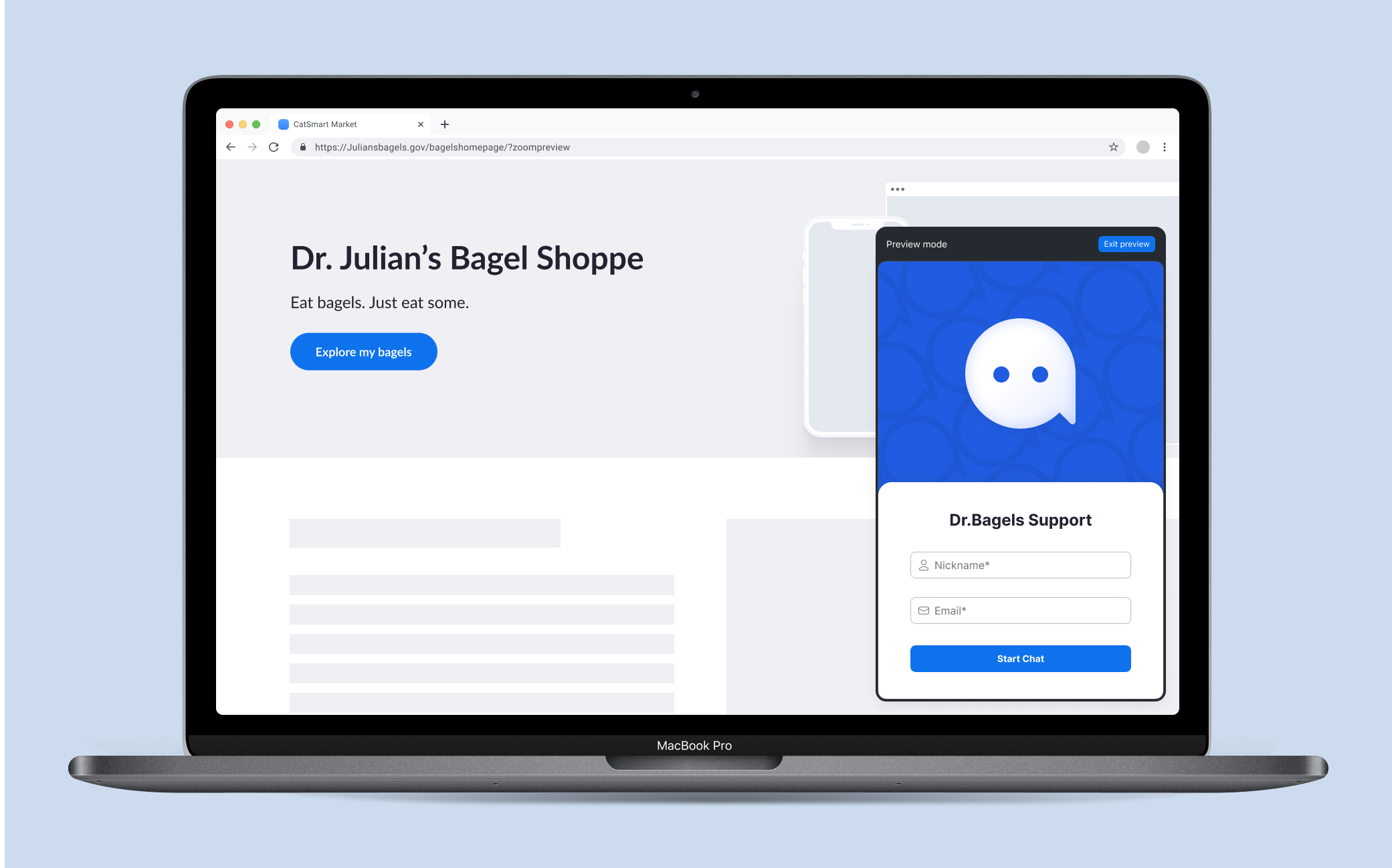

Provide powerful and simple visual previews of the bot, even before a site is available to test on.

Design Explorations

Design Validation

To make sure we were building the right feature and weren't missing any important aspects, I conducted another round of testing. I interviewed the same participants from the initial discovery, but this time ran contextual inquiry sessions with low-fidelity prototypes. I learned how the design options I had created held up, and the feedback was very valuable in shaping the feature for the better.

The design we originally favored turned out to be less powerful and flexible than another version that was a bigger swing. Feedback from real users with different backgrounds helped us determine the correct route.

“This version intrigues me. I think if we had this version, we would have no need for the current test bot, and I can see how this could be used in the future for upcoming bot types.”

UX Metrics

How would I know whether the project was successful once it was released?

We decided to track the usage of the new tool vs the existing testing tool, which was barely used due to discoverability issues.

We also tracked usage of the new detail mode, measured as the share of testing sessions in which the detail view was used.

Finally, we decided to measure what percentage of clients used the new testing framework within 30 days of release.
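As a rough illustration of how the detail view and 30-day adoption metrics could be computed, here is a minimal Python sketch. The data shapes, field names, and sample values are all assumptions made up for this example; they are not the actual tracking schema or real results.

```python
from datetime import date, timedelta


def detail_view_rate(sessions):
    """Share of testing sessions in which the detail view was used."""
    if not sessions:
        return 0.0
    used = sum(1 for s in sessions if s["detail_view_used"])
    return used / len(sessions)


def adoption_within_window(clients, first_use, release, window_days=30):
    """Share of clients whose first use fell within the window after release."""
    if not clients:
        return 0.0
    cutoff = release + timedelta(days=window_days)
    adopters = sum(
        1 for c in clients
        if c in first_use and release <= first_use[c] <= cutoff
    )
    return adopters / len(clients)


# Hypothetical sample data, for illustration only.
sessions = [
    {"detail_view_used": True},
    {"detail_view_used": False},
    {"detail_view_used": True},
    {"detail_view_used": True},
]
clients = ["a", "b", "c", "d"]
first_use = {
    "a": date(2024, 1, 5),   # inside the 30-day window
    "b": date(2024, 3, 1),   # too late to count
    "c": date(2024, 1, 31),  # exactly on the cutoff, counted
}
release = date(2024, 1, 1)

print(detail_view_rate(sessions))                           # 0.75
print(adoption_within_window(clients, first_use, release))  # 0.5
```

Dividing by all clients, rather than only those who tried the tool, keeps the adoption figure honest about clients who never found the feature.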

Results

This feature set was long overdue for our clients and was met with extreme enthusiasm. We beat every metric we were tracking and received feedback on ways to improve it. We did miss a couple of areas, such as testing for bot drafts, which will be addressed in the next release.